About alignment files (BAM and CRAM)

Answer:

All our alignment files are in BAM or CRAM format. BAM is a standard alignment format which was defined by the 1000 Genomes consortium and has since seen wide community adoption. CRAM is a more compact alternative whose compression is driven by the reference the sequence data is aligned to.

The CRAM file format was designed by the EBI to reduce the disk footprint of alignment data in these days of ever-increasing data volumes.

The CRAM files the 1000 Genomes Project distributes are lossy CRAM files, which reduce the base quality scores using the Illumina 8-bin compression scheme, as described in the lossy compression section on the CRAM usage page.

There is a GitHub page where the format of CRAM files is discussed and help can be found.

CRAM files can be read using many Picard tools, and samtools has read the format natively since version 1.0.

BAM file names

BAM file names look like:

NA00000.location.platform.population.analysis_group.YYYYMMDD.bam

The bai index and bas statistics files are named in the same way.

The name includes the following, in order:

- the individual sample ID
- where the sequence is mapped to: if the file contains only mappings to a particular chromosome, the name contains that chromosome; otherwise, mapped means the whole genome mapping and unmapped means the reads which failed to map to the reference (pairs where one mate mapped and the other didn’t stay in the mapped file)
- the sequencing platform
- the population of the sample, using our three letter population code
- the analysis group, which reflects the sequencing strategy

The date matches the date of the sequence used to build the BAMs and can also be found in the sequence.index filename.
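The naming pattern can be split on the dots to recover each field. The following is a minimal sketch rather than a project tool: the function name `parse_bam_name` and the example file name are hypothetical, and it assumes exactly the six fields in the pattern shown above. Some released files carry extra fields (for example an aligner name), in which case the function reports an error instead of guessing.

```bash
# Split a BAM file name of the form
#   sample.location.platform.population.analysis_group.date.bam
# into labelled fields. parse_bam_name is a hypothetical helper name.
parse_bam_name() {
    name=$(basename "$1" .bam)
    old_ifs=$IFS
    IFS=.
    set -f              # avoid glob expansion while splitting
    set -- $name        # positional parameters now hold the fields
    set +f
    IFS=$old_ifs
    if [ "$#" -ne 6 ]; then
        echo "unexpected number of fields in: $name" >&2
        return 1
    fi
    printf 'sample=%s\nlocation=%s\nplatform=%s\npopulation=%s\nanalysis_group=%s\ndate=%s\n' \
        "$1" "$2" "$3" "$4" "$5" "$6"
}

# Hypothetical file name following the documented pattern
parse_bam_name NA00000.mapped.ILLUMINA.GBR.low_coverage.20101123.bam
```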

Unmapped bams

The unmapped BAMs contain all the reads for the given individual which could not be placed on the reference genome. They contain no mapping information.

Please note that for any paired-end sequence where one end maps successfully and the other does not, both reads are found in the mapped BAM.

Bas files

Bas files contain statistics which we generate for our alignment files and distribute alongside them.

These are read group level statistics in a tab-delimited format and are described in this README.

Each mapped and unmapped BAM file has an associated bas file. We also provide them collected together into a single file in the alignment_indices directory, dated to match the alignment release.

Related questions:

About FASTQ sequence read files

Answer:

Our sequence files are distributed in FASTQ format. Some are hosted on our own FTP site and some by the Sequence Read Archive.

Format

We use Sanger style phred scaled quality encoding.

The files are all gzip-compressed and the format looks like this, with a four-line repeating pattern:

@ERR059938.60 HS9_6783:8:2304:19291:186369#7/2

GTCTCCGGGGGCTGGGGGAACCAGGGGTTCCCACCAACCACCCTCACTCAGCCTTTTCCCTCCAGGCATCTCTGGGAAAGGACATGGGGCTGGTGCGGGG

+

7?CIGJB:D:-F7LA:GI9FDHBIJ7,GHGJBKHNI7IN,EML8IFIA7HN7J6,L6686LCJE?JKA6G7AK6GK5C6@6IK+++?5+=<;227*6054
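Because every record occupies exactly four lines, read and base counts can be derived by looking only at the second line of each group. The sketch below is illustrative: `fastq_counts` is a hypothetical helper name, and it assumes the input has no blank lines or wrapped sequence lines.

```bash
# Read uncompressed FASTQ on standard input and print
# "<read count><TAB><base count>", using the four-line record layout.
# fastq_counts is a hypothetical helper name.
fastq_counts() {
    awk 'NR % 4 == 2 { reads++; bases += length($0) }
         END { printf "%d\t%d\n", reads, bases }'
}

# Small inline demonstration with two made-up reads
printf '@r1\nACGT\n+\nIIII\n@r2\nACG\n+\nIII\n' | fastq_counts

# Typical use on a gzipped file:
#   gzip -dc ERR001268_1.filt.fastq.gz | fastq_counts
```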

Files for each individual

Many of our individuals have multiple FASTQ files. This is because many of our individuals were sequenced using more than one run of a sequencing machine.

Each set of files named like ERR001268_1.filt.fastq.gz, ERR001268_2.filt.fastq.gz and ERR001268.filt.fastq.gz represents all the sequence from a sequencing run.

The labels with _1 and _2 represent paired-end files; mate1 is found in the file labelled _1 and mate2 is found in the file labelled _2. The files which do not have a number in their name contain single-ended reads. This can be for two reasons. Some sequencing early in the project was single-ended. Also, as we filter our FASTQ files as described in our README, if one of a pair of reads gets rejected the other read gets placed in the single file.
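The naming convention can be checked mechanically when sorting downloaded files. This is a small sketch under the assumption that files keep the `.filt.fastq.gz` suffix shown above; `fastq_role` is a hypothetical helper name.

```bash
# Report whether a FASTQ file holds mate1, mate2 or single-ended reads,
# judged only from its file name. fastq_role is a hypothetical helper name.
fastq_role() {
    case "$(basename "$1")" in
        *_1.filt.fastq.gz) echo mate1 ;;
        *_2.filt.fastq.gz) echo mate2 ;;
        *.filt.fastq.gz)   echo single ;;
        *)                 echo unknown ;;
    esac
}

for f in ERR001268_1.filt.fastq.gz ERR001268_2.filt.fastq.gz ERR001268.filt.fastq.gz; do
    printf '%s\t%s\n' "$f" "$(fastq_role "$f")"
done
```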

When an individual has many files with different run accessions (e.g. ERR001268), this means it was sequenced multiple times. This can either be for the same experiment, as some centres used multiplexing to have better control over their coverage levels for the low coverage sequencing, or because it was sequenced using different protocols or on different platforms.

For a full description of the sequencing conducted for the project please look at our sequence.index file.

Related questions:

About index files

Answer:

We describe our sequence metadata in sequence index files. The index for data from the 1000 Genomes Project can be found in the 1000 Genomes data collection directory. Additional indices are present for data in other data collections. Our old index files, which describe the data used in the main project, can be found in the historical_data directory.

Sequence index files are tab delimited files and frequently contain these columns:

| Column | Title | Description |
|---|---|---|
| 1 | FASTQ_FILE | path to fastq file on ftp site or ENA ftp site |
| 2 | MD5 | md5sum of file |
| 3 | RUN_ID | SRA/ERA run accession |
| 4 | STUDY_ID | SRA/ERA study accession |
| 5 | STUDY_NAME | Name of study |
| 6 | CENTER_NAME | Submission centre name |
| 7 | SUBMISSION_ID | SRA/ERA submission accession |
| 8 | SUBMISSION_DATE | Date sequence submitted, YYYY-MM-DD |
| 9 | SAMPLE_ID | SRA/ERA sample accession |
| 10 | SAMPLE_NAME | Sample name |
| 11 | POPULATION | Sample population, this is a 3 letter code as defined in README_populations.md |
| 12 | EXPERIMENT_ID | Experiment accession |
| 13 | INSTRUMENT_PLATFORM | Type of sequencing machine |
| 14 | INSTRUMENT_MODEL | Model of sequencing machine |
| 15 | LIBRARY_NAME | Library name |
| 16 | RUN_NAME | Name of machine run |
| 17 | RUN_BLOCK_NAME | Name of machine run sector (This is no longer recorded so this column is entirely null, it was left in so as not to disrupt existing sequence index parsers) |
| 18 | INSERT_SIZE | Submitter specified insert size |
| 19 | LIBRARY_LAYOUT | Library layout, this can be either PAIRED or SINGLE |
| 20 | PAIRED_FASTQ | Name of mate pair file if exists (Runs with failed mates will have a library layout of PAIRED but no paired fastq file) |
| 21 | WITHDRAWN | 0/1 to indicate if the file has been withdrawn, only present if a file has been withdrawn |
| 22 | WITHDRAWN_DATE | This is generally the date the file is generated on |
| 23 | COMMENT | comment about reason for withdrawal |
| 24 | READ_COUNT | read count for the file |
| 25 | BASE_COUNT | basepair count for the file |
| 26 | ANALYSIS_GROUP | the analysis group of the sequence, this reflects sequencing strategy. For 1000 Genomes Project data, this includes low coverage, high coverage, exon targeted and exome to reflect the two non low coverage pilot sequencing strategies and the two main project sequencing strategies used by the 1000 Genomes Project. |
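Since the index is tab-delimited with fixed column positions, it can be queried with awk. The following sketch lists the FASTQ paths for one sample while skipping withdrawn runs. The helper name `active_fastqs` is hypothetical, and the handling of header lines (a first field of FASTQ_FILE, or lines starting with `#`) is an assumption that should be checked against the particular index file in use.

```bash
# Print column 1 (FASTQ_FILE) for every row whose SAMPLE_NAME (column 10)
# matches the requested sample and whose WITHDRAWN flag (column 21) is not 1.
# active_fastqs is a hypothetical helper name.
# Usage: active_fastqs <sequence.index file> <sample name>
active_fastqs() {
    awk -F '\t' -v sample="$2" '
        $1 == "FASTQ_FILE" || $1 ~ /^#/ { next }   # assumed header forms
        $10 == sample && $21 != 1       { print $1 }
    ' "$1"
}

# Example call (file name and sample are placeholders):
#   active_fastqs sequence.index HG00096
```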

analysis.sequence.index files

The sequence.index file contains a list of all the sequence data produced by the project, pointers to the file locations on the FTP site and also all the metadata associated with each sequencing run.

For the phase 3 analysis the consortium has decided to only use Illumina platform sequence data with reads of 70 base pairs or longer. The analysis.sequence.index file contains only the active runs which match this criterion. There are also withdrawn runs in this index. These runs are withdrawn for one of the following reasons:

- They have insufficient raw sequence to meet our 3x non duplicated aligned coverage criteria for low coverage alignments.
- After the alignment has been run they have failed our post alignment quality controls for short indels.
- Contamination.
- They do not meet our coverage criteria.

Since the alignment release based on 20120522, we have only released alignments based on the analysis.sequence.index.

Related questions:

About variant identifiers

Answer:

All of the 1000 Genomes SNPs and indels have been submitted to dbSNP, and will have rsIDs in the main 1000 Genomes release files. The SVs have all been submitted to DGVa and have esvIDs in the main files.

If you are using some of the older working files that were used during the data gathering phase of the 1000 Genomes Project, you may find some variants with other kinds of identifiers, such as Alu_umary_Alu_###. These identifiers were created internally by the groups that did that set of particular variant calling, and are not found anywhere other than these files, as they will have been replaced by official IDs in the later files.

KGP identifiers

You may also see kgp identifiers, which were created by Illumina for their genotyping platform before some variants identified during the pilot phase of the project had been assigned rs numbers.

We do not possess a mapping of these identifiers to current rs numbers. As far as we are aware no such list exists.

Related questions:

About VCF variant files

Answer:

Variants are released in VCF format. As these have been released at different times, they are on different versions of the format; the version is indicated in the file header. Our VCFs are multi-individual, with genotypes listed for each sample; we do not have individual or population specific VCFs.

Are all the genotype calls in the 1000 Genomes Project VCF files bi-allelic?

No. While bi-allelic calling was used in earlier phases of the 1000 Genomes Project, multi-allelic SNPs, indels, and a diverse set of structural variants (SVs) were called in the final phase 3 call set. More information can be found in the main phase 3 publication from the 1000 Genomes Project and the structural variation publication. The supplementary information for both papers provides further detail.

In earlier phases of the 1000 Genomes Project, the programs used for genotyping were unable to genotype sites with more than two alleles. In most cases, the highest frequency alternative allele was chosen and genotyped. Depth of coverage, base quality and mapping quality were also used when making this decision. This was the approach used in phase 1 of the 1000 Genomes Project. As methods were developed during the 1000 Genomes Project, it is recommended to use the final phase 3 data in preference to earlier call sets.

Related questions:

Are the IGSR variants available in dbSNP?

Answer:

When studies are published, their variant call sets are submitted to the archives (dbSNP, DGVa, EVA, etc.).

The 1000 Genomes Project SNPs and short indels were all submitted to dbSNP and longer structural variants to the DGVa.

The accessions for data sets in the archives can be found in the accompanying publications (listed alongside the data collections).

Related questions:

Are the IGSR variants available in genome browsers?

Answer:

1000 Genomes Project data is available at both Ensembl and the UCSC Genome Browser.

Ensembl provides consequence information for the variants. The variants that are loaded into the Ensembl database and have consequence types assigned are displayed on the Variation view. Ensembl can also offer consequence predictions using their Variant Effect Predictor (VEP).

You can see individual genotype information in the Ensembl browser by looking at the Individual Genotypes section of the page from the menu on the left hand side.

Related questions:

Are there any statistics about how much sequence data is in IGSR?

Answer:

We do not provide summary statistics that span the collections in IGSR. However, our index files, provided for each data collection on the FTP site, include information for each collection. The following describes the information available using the 1000 Genomes Project files as an example; similar files are available for the other data collections.

For raw data, a sequence.index file contains base and read counts for each of the active FASTQ files.

For the aligned data all BAM and CRAM files have BAS files associated with them. These contain read group level statistics for the alignment. We also provide this in a collected form in alignment index files. The alignment indices for the alignments of the 1000 Genomes Project data to GRCh38 are available on the FTP site. There is also an historic alignment indices directory, which contains a .hsmetrics file with the results of the Picard tool CalculateHsMetrics for all the exome alignments and summary files, which compare statistics between old and new alignment releases during the 1000 Genomes Project.

Related questions:

Can I BLAST against the sequences in IGSR?

Answer:

The 1000 Genomes raw sequence data represents more than 30,000x coverage of the human genome and there are no tools currently available to search against the complete data set. You can, however, use the Ensembl or NCBI BLAST services and then use these results to find 1000 Genomes Project variants in dbSNP.

Related questions:

Can I convert VCF files to PLINK/PED format?

Answer:

We provide a VCF to PED tool to convert from VCF to PLINK PED format. This tool has documentation for both the web interface and the Perl script.

An example Perl command to run the script would be:

perl vcf_to_ped_converter.pl -vcf ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20110521/ALL.chr13.phase1_integrated_calls.20101123.snps_indels_svs.genotypes.vcf.gz -sample_panel_file ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20110521/phase1_integrated_calls.20101123.ALL.sample_panel -region 13:32889611-32973805 -population GBR -population FIN

Related questions:

Can I get cell lines for IGSR samples?

Answer:

In almost all cases, cell lines are available. For most samples, cell lines are held at the Coriell Cell Repository. Samples from HGDP are available at CEPH.

Coriell hold detailed information on the 1000 Genomes Project populations and sample collection process.

Individual sample pages in the data portal include links to the repository where the cell line is available.

The main exception to the above is around 400 samples in the Gambian Genome Variation Project (GGVP), for which cell lines are not available.

Related questions:

Can I get phased genotypes and haplotypes for the individual genomes?

Answer:

Phased variant call sets are described in “Are the variant calls in IGSR phased?”.

You can obtain individual phased genotypes through either the Ensembl Data Slicer or a combination of tabix and VCFtools, which allows you to subsample VCF files for a particular individual or list of individuals.

The Data Slicer has both filter by individual and filter by population options. The individual filter takes the individual names in the VCF header and presents them as a list before giving you the final file. If you wish to filter by population, you must also provide a panel file which pairs individuals with populations. Again, you are presented with a list to select from before being given the final file. Both lists can have multiple elements selected.

To use tabix you must also use a VCFtools Perl script called vcf-subset. The command line would look like:

tabix -h ftp://ftp-trace.ncbi.nih.gov/1000genomes/ftp/release/20100804/ALL.2of4intersection.20100804.genotypes.vcf.gz 17:1471000-1472000 | perl vcf-subset -c HG00098 | bgzip -c > /tmp/HG00098.20100804.genotypes.vcf.gz

Please also note that some studies, such as the second phase of the Human Genome Structural Variation Consortium (HGSVC), are now producing haplotype resolved assemblies.

Related questions:

Can I get phenotype, gender and family relationship information for the individuals?

Answer:

For the 1000 Genomes Project, due to the freely available nature of the data, no phenotype information was collected for any of the samples. All donors were over 18 and declared themselves to be healthy at the time of collection. We do provide a sample spreadsheet and a pedigree file which contain ethnicity and gender for 1000 Genomes samples.

Related questions:

Can I query IGSR programmatically?

Answer:

Our data is in standard formats like SAM and VCF, which have tools associated with them. To manipulate SAM/BAM files look at SAMtools for a C based toolkit and links to APIs in other languages. To interact with VCF files look at VCFtools which is a set of Perl and C++ code.

Related questions:

Can I search the website?

Answer:

You can search the website.

Every page on the IGSR website has a search box in the top right hand corner. This allows you to find information anywhere on the site.

Related questions:

Can I volunteer to be part of the 1000 genomes project?

Answer:

The 1000 Genomes Project is not accepting volunteers to be sequenced. For more information about how samples were recruited, please see the About page.

Another large scale resequencing project that does still have rounds of recruitment is the Personal Genome Project.

Related questions:

Do I need a password to access IGSR data?

Answer:

All the 1000 Genomes information is freely available without passwords.

Our raw and analysis data are available from our FTP site, which has two mirrored locations: EBI and NCBI. These are accessible using both FTP and a UDP-based transfer method using the ascp client, which is freely available from Aspera. More information about these can be found on the data access page.

Related questions:

Do I need permission to use IGSR data in my own scientific research?

Answer:

The 1000 Genomes data is made available according to the Fort Lauderdale Agreement. The IGSR data use statement can be found on our data disclaimer page.

Related questions:

Do you have assembled FASTA sequences for samples?

Answer:

Recent projects, such as the second phase of the Human Genome Structural Variation Consortium (HGSVC) have produced assemblies. These are linked to from the page for that data collection. These are haplotype resolved assemblies. More details can be found in the accompanying publication.

The 1000 Genomes Project did not create any assemblies from the genome sequence data it generated.

The Gerstein Lab at Yale University created a diploid version of the NA12878 sequence, which is available from the Gerstein website under NA12878_diploid. When used, groups should cite AlleleSeq: analysis of allele-specific expression and binding in a network framework, Rozowsky et al., Molecular Systems Biology 7:522.

You can create a FASTA file incorporating the variants from an individual with a VCFtools Perl script called vcf-consensus.

An example set of command lines would be:

#Extract the region and individual of interest from the VCF file you want to produce the consensus from

tabix -h ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20110521/ALL.chr17.phase1_release_v3.20101123.snps_indels_svs.genotypes.vcf.gz 17:1471000-1472000 | perl vcf-subset -c HG00098 | bgzip -c > HG00098.vcf.gz

#Index the new VCF file so it can be used by vcf-consensus

tabix -p vcf HG00098.vcf.gz

#Run vcf-consensus and use --sample to apply sample-specific variants. If not given, all the variants are applied

cat ref.fa | vcf-consensus HG00098.vcf.gz --sample HG00098 > HG00098.fa

You can get more support for VCFtools on their help mailing list.

Related questions:

Do you have structural variation data?

Answer:

The 1000 Genomes Project considered structural variation (longer than 50bp in length) based on short read Illumina data in the publication by Sudmant et al. in 2015.

Structural variants are also considered in the analysis of high-coverage short read data in work done by NYGC.

However, short read data has limitations for assessing structural variation. The Human Genome Structural Variation Consortium (HGSVC) applied a variety of technologies to explore their ability to detect structural variation. This work has subsequently been expanded and other projects are using a variety of technologies to produce haplotype resolved genome assemblies.

Related questions:

How are allele frequencies calculated?

Answer:

Our standard AF values are allele frequencies rounded to two decimal places calculated using allele count (AC) and allele number (AN) values.

LDAF is an allele frequency value in the info column of our phase 1 VCF files. LDAF is the allele frequency as inferred from the haplotype estimation. You will note that LDAF does sometimes differ from the AF calculated on the basis of allele count and allele number. This generally means there are many uncertain genotypes for this site. This is particularly true close to the ends of the chromosomes.

Genotype Dosage

The phase 1 data set also contains Genotype Dosage values. These come from Mach/Thunder, the imputation engine used for genotype refinement in the phase 1 data set.

The Dosage represents the predicted dosage of the non reference allele given the data available. It will always have a value between 0 and 2.

The formula is Dosage = Pr(Het|Data) + 2*Pr(Alt|Data)

The dosage value gives an indication of how well the genotype is supported by the imputation engine. The genotype likelihood gives an indication of how well the genotype is supported by the sequence data.
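To make the formula concrete, the sketch below evaluates it for a pair of made-up genotype probabilities. The helper name `dosage` and the input values are hypothetical; the inputs are taken to be Pr(Het|Data) and Pr(Alt|Data) as used in the formula above.

```bash
# Dosage = Pr(Het|Data) + 2 * Pr(Alt|Data)
# dosage is a hypothetical helper; arguments are Pr(Het) then Pr(Alt).
dosage() {
    awk -v het="$1" -v alt="$2" 'BEGIN { printf "%.3f\n", het + 2 * alt }'
}

dosage 0.10 0.85   # a confident homozygous-alternative call, close to 2
dosage 0.90 0.05   # a confident heterozygous call, close to 1
```

A value near 0, 1 or 2 indicates a well supported genotype, while intermediate values indicate that the probability is spread across more than one genotype.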

Related questions:

- How do I find out information about a single variant?

- Why do some variants in the phase1 release have a zero Allele Frequency?

- How do I get a genomic region sub-section of your files?

- Can I get phased genotypes and haplotypes for the individual genomes?


How do I cite IGSR?

Answer:

When citing IGSR in general, please cite our Nucleic Acids Research database paper. For further details on citing specific data sets, please see the appropriate data reuse policy and also cite any appropriate publication. Publications that we are aware of are listed alongside the data collections in our data portal.

When citing the 1000 Genomes Project in general please use the final phase 3 paper, A global reference for human genetic variation, The 1000 Genomes Project Consortium, Nature 526, 68-74 (01 October 2015) doi:10.1038/nature15393. This paper is published under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported licence. Please feel free to share and redistribute the paper appropriately.

If you have any questions, please contact us at info@1000genomes.org.

Related questions:

How do I contact you?

Answer:

If you want to ask us a question, please email info@1000genomes.org.

Related questions:

How do I find out about new releases?

Answer:

We announce all new data releases on the front page of our website.

You can also follow these announcements on RSS and Twitter. If you want to ask us a question, please email info@1000genomes.org.

Related questions:

How do I find out information about a single variant?

Answer:

Our VCF files contain global and super population alternative allele frequencies. You can see this in our most recent release. For multi allelic variants, each alternative allele frequency is presented in a comma separated list.

An example line, including the info column which contains this information, looks like:

1 15211 rs78601809 T G 100 PASS AC=3050;AF=0.609026;AN=5008;NS=2504;DP=32245;EAS_AF=0.504;AMR_AF=0.6772;AFR_AF=0.5371;EUR_AF=0.7316;SAS_AF=0.6401;AA=t|||;VT=SNP
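Individual values can be pulled out of the semicolon-separated info column with standard text tools. This is a minimal sketch: `info_value` is a hypothetical helper name, and it only handles `KEY=value` entries, not flag entries without a value.

```bash
# Print the value of one key from a VCF INFO string.
# info_value is a hypothetical helper. Usage: info_value <INFO string> <key>
info_value() {
    printf '%s\n' "$1" | tr ';' '\n' | awk -F '=' -v key="$2" '$1 == key { print $2 }'
}

# INFO column copied from the example line above
info='AC=3050;AF=0.609026;AN=5008;NS=2504;DP=32245;EAS_AF=0.504;AMR_AF=0.6772;AFR_AF=0.5371;EUR_AF=0.7316;SAS_AF=0.6401;AA=t|||;VT=SNP'
info_value "$info" EUR_AF
info_value "$info" AF
```

For a multi allelic site the printed value is the comma separated list, one entry per alternative allele.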

If you want population specific allele frequencies you have three options:

- For a single variant you can look at the population genetics page for a variant in the Ensembl browser. This gives you pie charts and a table for a single site.
- For a genomic region you can use our allele frequency calculator tool, which gives a set of allele frequencies for selected populations.
- If you would like sub population allele frequencies for a whole file, you are best to use the vcftools command line tool.

This is done using a combination of two vcftools commands called vcf-subset and fill-an-ac.

An example command set using files from our phase 1 release would look like:

grep CEU integrated_call_samples.20101123.ALL.panel | cut -f1 > CEU.samples.list

vcf-subset -c CEU.samples.list ALL.chr13.integrated_phase1_v3.20101123.snps_indels_svs.genotypes.vcf.gz | fill-an-ac | bgzip -c > CEU.chr13.phase1.vcf.gz


Once you have this file you can calculate your frequency by dividing AC (allele count) by AN (allele number).

Please note that some early VCF files from the main project used LD information and other variables to help estimate the allele frequency. This means in these files the AF does not always equal AC/AN. In the phase 1 and phase 3 releases, AC/AN should always match the allele frequency quoted.
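The division itself is simple to script. The sketch below uses the AC and AN values from the example line earlier in this answer; `allele_frequency` is a hypothetical helper name, and the six decimal places are chosen only to match that example.

```bash
# Allele frequency = allele count (AC) / allele number (AN).
# allele_frequency is a hypothetical helper; it fails if AN is zero.
allele_frequency() {
    awk -v ac="$1" -v an="$2" 'BEGIN {
        if (an == 0) exit 1
        printf "%.6f\n", ac / an
    }'
}

allele_frequency 3050 5008   # AC and AN from the rs78601809 example
```

With these inputs the result agrees with the AF=0.609026 value quoted in that line.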

Lists of identifiers

You can get information about a list of variant identifiers using Ensembl’s Biomart.

This YouTube video gives a tutorial on how to do it.

The basic steps are:

- Select the Ensembl Variation Database

- Select the Homo sapiens Short Variants (SNPs and indels excluding flagged variants) dataset

- Select the Filters menu from the left hand side

- Expand the General Variant Filters section

- Check the Filter by Variant Name (e.g. rs123, CM000001) [Max 500 advised] box

- Add your list of rs numbers to the box or browse for a file which contains this list

- Click on the Results Button in the headline section

- This should provide you with a table of results which you can also download in Excel or CSV format

If you would like the coordinates on GRCh38, you should use the main Ensembl site. If you would like the coordinates on GRCh37, you should use the dedicated GRCh37 site.

Related questions:

How do I find specific alignment files?

Answer:

The easiest way to find the alignment files you’re looking for is with the Data Portal. You can search for individuals, populations and data collections, and filter the files by data type and technologies. This will give you locations of the files, which you can use to download directly, or to export a list to use with a download manager.

You can find an index of our alignments in our alignment.index file. There are dated versions of these files and statistics surrounding each alignment release in the alignment_indices directory. Please note that, with few exceptions, we only keep the most recent QC passed alignment for each sample on the FTP site.

Related questions:

How do I find specific sequence read files?

Answer:

The easiest way to find the sequence files you’re looking for is with the Data Portal. You can search for individuals, populations and data collections, and filter the files by data type and technologies. This will give you locations of the files, which you can use to download directly, or to export a list to use with a download manager.

Related questions:

How do I find specific variant files?

Answer:

The easiest way to find the variant files you’re looking for is with the Data Portal. You can search for individuals, populations and data collections, and filter the files by data type and technologies. This will give you locations of the files, which you can use to download directly, or to export a list to use with a download manager.

The VCFs are by chromosome, and contain genotypes for all the individuals in the dataset. Chromosome X, Y and MT variants are available for the phase 3 variant set. The chrX calls were made in the same manner as the autosome variant calls and as such are part of the integrated call set and include SNPs, indels and large deletions. Note that the males in this call set are shown as haploid for any chrX SNP not in the Pseudo Autosomal Region (PAR). The chrY and MT calls were made independently. Both call sets are presented in an integrated file in the phase 3 FTP directory, chrY and chrMT. ChrY has SNPs, indels and large deletions. ChrMT only has SNPs and indels. For more details about how these call sets were made please see the phase 3 paper.

Related questions:

- How do I get a genomic region sub-section of your files?

- About VCF variant files

- Can I get phased genotypes and haplotypes for the individual genomes?

- What are your filename conventions?

- How do I find specific alignment files?

- How do I find the reference genomes files?

- How do I find the most up-to-date data?

- Are the IGSR variants available in dbSNP?

How do I find the most up-to-date data?

Answer:

Reviewing the list of data collections and their publications in our data portal is a good starting point.

We also share data via our FTP site, and data may be available there some time before being added to the website. Data collection directories are available on the FTP site.

In addition, to track changes on our FTP site we provide change logs and a current.tree file, which list all files present on our FTP site and any changes made to them.

Related questions:

How do I find the reference genomes files?

Answer:

Our reference data sets can be found in technical/reference/. This includes items like the reference genome, ancestral alignments and standard annotation sets.

There is also a frozen version of the reference data used for the pilot project available in pilot_data/technical/reference.

Related questions:

How do I get a genomic region sub-section of your files?

Answer:

You can get a subsection of the VCF or BAM files using the Ensembl Data Slicer tool. This tool gives you a web interface requesting the URL of any VCF or BAM file and the genomic location you wish to get a sub-slice for. It also allows you to filter the file for particular individuals or populations if you also provide a panel file.

You can also subset VCFs using tabix on the command line, e.g.

tabix -h ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20100804/ALL.2of4intersection.20100804.genotypes.vcf.gz 2:39967768-39967768

Specifications for the VCF format, and a C++ and Perl tool set for VCF files, can be found at vcftools on SourceForge.

Please note that all our VCF files use plain integers and X/Y for their chromosome names, in the Ensembl style, rather than chr1 in the UCSC style. If you request a subsection of a VCF file using a chromosome name in the style chrN, as shown below, it will not work.

tabix -h ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20100804/ALL.2of4intersection.20100804.genotypes.vcf.gz chr2:39967768-39967768
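If your regions come from a UCSC-style source, the prefix can be stripped before the region is passed to tabix. This is a sketch only: `ensembl_region` is a hypothetical helper name, and the mapping of chrM to MT is an assumption based on the MT naming used for the mitochondrial calls.

```bash
# Convert a UCSC-style region (chr2:100-200) to the Ensembl style (2:100-200).
# ensembl_region is a hypothetical helper; regions without a chr prefix pass through.
ensembl_region() {
    region=${1#chr}
    case "$region" in
        M|M:*) region="MT${region#M}" ;;   # assumed mapping chrM -> MT
    esac
    printf '%s\n' "$region"
}

ensembl_region chr2:39967768-39967768
ensembl_region chrX:100000-200000
```

The output can then be used as the region argument, for example `tabix -h <url> "$(ensembl_region chr2:39967768-39967768)"`.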

You can subset alignment files with samtools on the command line, e.g.

samtools view -h ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/phase1/data/HG00154/alignment/HG00154.mapped.ILLUMINA.bwa.GBR.low_coverage.20101123.bam 17:7512445-7513455

Samtools supports streaming files and piping commands together, using both local and remote files. You can get more help with samtools from the samtools help mailing list.

Related questions:

- Can I convert VCF files to PLINK/PED format?

- What is the coverage depth?

- How do I find out information about a single variant?

- Can I get phased genotypes and haplotypes for the individual genomes?

- What methods were used for generating alignments?

- About alignment files (BAM and CRAM)

- How do I find specific alignment files?

How do I navigate the FTP site to find the files I need?

Answer:

The easiest way to find the files you’re looking for is with the Data Portal. You can search for individuals, populations and data collections, and filter the files by data type and technologies. This will give you locations of the files, which you can use to download directly, or to export a list to use with a download manager.

Related questions:

How many individuals have been sequenced in IGSR projects and how were they selected?

Answer:

There is data from 4973 individuals in IGSR, some related.

Related questions:

How was exome and exon targetted sequencing used?

Answer:

The 1000 Genomes Project has run two different pull-down experiments. These are labelled as “exon targetted” and “exome”.

An exon targetted run is part of the pilot study which targeted 1000 genes in nearly 700 individuals. The targets for this pilot can be found in the pilot_data/technical/reference directory.

An exome run is part of the whole exome sequencing project which targeted the entirety of the CCDS gene set. The targets used for the phase 1 data release of 1092 samples can be found in technical/reference/exome_pull_down_targets_phases1_and_2; the targets for phase 3 analysis can be found in technical/reference/exome_pull_down_targets/.

The phase 1 and 2 targets are intersections of the different technologies used and the CCDS gene list. For phase 3 we used a union of two different pull-down lists: NimbleGen EZ_exome v1 and Agilent sure select v2. In phase 3 very little exome specific calling took place. Instead, analysis groups tended to call variants using the low coverage and exome data together in an integrated manner.

Capture technology

Different centres have used different pull-down technologies for the Exome sequencing done for the 1000 Genomes project.

Baylor College of Medicine used NimbleGen SeqCap_EZ_Exome_v2 for its SOLiD-based exome sequencing. For its more recent Illumina-based exome sequencing it used a custom array, HSGC VCRome.

The Broad Institute has used Agilent SureSelect_All_Exon_V2 (https://earray.chem.agilent.com/earray/ using ELID: S0293689).

The BGI used NimbleGen SeqCap EZ exome V1 for the phase 1 samples and NimbleGen SeqCap_EZ_Exome_v2 for phase 2 and 3 (the v1 files were obtained from BGI directly; they are discontinued from Nimblegen).

The Washington University Genome Center used Agilent SureSelect_All_Exon_V2 (https://earray.chem.agilent.com/earray/ using ELID: S0293689) for phase 1 and phase 2, and NimbleGen SeqCap_EZ_Exome v3 for phase 3.

Related questions:

Is there gene expression and/or functional annotation available for the samples?

Answer:

Functional annotation

As part of our phase 1 analysis we performed functional annotation of our phase 1 variants with respect to both coding and non-coding annotation from GENCODE and the ENCODE project respectively.

This functional annotation can be found in our phase 1 analysis results directory. We present both the annotation we compared the variants to and VCF files which contain the functional consequences for each variant.

Gene expression

The most important existing expression datasets involving 1000 Genomes individuals are probably the following:

- RNAseq (mRNA & miRNA) on 465 individuals (CEU, TSI, GBR, FIN, YRI)

Pre-publication RNA-sequencing data from the Geuvadis project is available through http://www.geuvadis.org

http://www.ebi.ac.uk/arrayexpress/experiments/E-GEUV-1/samples.html

http://www.ebi.ac.uk/arrayexpress/experiments/E-GEUV-2/samples.html

- RNAseq on 60 CEU individuals [1]

http://www.ebi.ac.uk/arrayexpress/experiments/E-MTAB-197

- Expression arrays on about 800 HapMap 3 individuals with a lot of overlap with 1000g data [1,2]

http://www.ebi.ac.uk/arrayexpress/experiments/E-MTAB-198

http://www.ebi.ac.uk/arrayexpress/experiments/E-MTAB-264

- RNAseq for 69 YRI individuals [3]

http://www.ebi.ac.uk/arrayexpress/experiments/E-GEOD-19480

References

- [1] Montgomery SB, Sammeth M, Gutierrez-Arcelus M, Lach RP, Ingle C, Nisbett J, Guigo R, Dermitzakis ET. Transcriptome genetics using second generation sequencing in a Caucasian population. Nature. 2010 Apr 1;464(7289):773-7. Epub 2010 Mar 10.

- [2] Stranger BE, Montgomery SB, Dimas AS, Parts L, Stegle O, Ingle CE, Sekowska M, Davey Smith G, Evans D, Gutierrez-Arcelus M, Price A, Raj T, Nisbett J, Nica AC, Beazley C, Durbin R, Deloukas P, Dermitzakis ET. Patterns of cis regulatory variation in diverse human populations. PLoS Genetics, in press.

- [3] Pickrell JK, Marioni JC, Pai AA, Degner JF, Engelhardt BE, Nkadori E, Veyrieras JB, Stephens M, Gilad Y, Pritchard JK. Understanding mechanisms underlying human gene expression variation with RNA sequencing. Nature. 2010 Apr 1;464(7289):768-72. Epub 2010 Mar 10.

Related questions:

There is a corrupt file on your ftp site.

Answer:

Many of our files are very large (>5GB) and can become corrupted during download.

Before emailing us about a problem file, first check whether the size and md5 checksum of your copy match our records in the current.tree file.

If the size and/or the md5 checksum don’t match, you need to download the file again.

If the size and the md5 checksum both match what is in current.tree, please email info@1000genomes.org to let us know which file has a problem.
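The check described above can be sketched as a small shell function. This is only an illustration: it assumes that current.tree is tab-separated with the file path in column 1, the size in bytes in column 3 and the md5 checksum in column 5, and that md5sum is installed. The function name check_download is hypothetical.

```shell
# Compare a downloaded file's size and md5 checksum with its current.tree entry.
# Assumption: current.tree is tab-separated, with the path in column 1,
# the size in bytes in column 3 and the md5 checksum in column 5.
check_download() {
    local_file="$1"   # path to your downloaded copy
    tree_path="$2"    # path of the same file as listed in current.tree
    tree_file="$3"    # local copy of current.tree

    # Look up the recorded size and checksum for this path.
    expected=$(awk -F'\t' -v p="$tree_path" '$1 == p { print $3 "\t" $5 }' "$tree_file")
    if [ -z "$expected" ]; then
        echo "not listed"
        return 2
    fi

    # Measure the local copy.
    actual_size=$(wc -c < "$local_file" | tr -d ' ')
    actual_md5=$(md5sum "$local_file" | cut -d' ' -f1)

    if [ "$expected" = "$(printf '%s\t%s' "$actual_size" "$actual_md5")" ]; then
        echo "ok"
    else
        echo "mismatch"
        return 1
    fi
}
```

A result of "mismatch" suggests downloading the file again; "ok" on a file that still fails to open is the case worth reporting by email.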

Related questions:

Was HLA Diversity studied in IGSR?

Answer:

HLA diversity is not something which was studied by the 1000 Genomes Project directly. However, groups have looked at the HLA diversity of the samples in the 1000 Genomes Project.

2018 data

The most recent of these studies was published by Laurent Abi-Rached, Julien Paganini and colleagues in 2018 and covers 2,693 samples from the work of the 1000 Genomes Project. Details of the study and data used in this work are available via the publication and the HLA types are available on our FTP site at ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/data_collections/HLA_types/.

2014 data

The FTP site also hosts data from an earlier study by Pierre-Antoine Gourraud, Jorge Oksenberg and colleagues at UCSF, who carried out an HLA typing assay on DNA sourced from Coriell for 1000 Genomes samples. This earlier study looks at only the 1,267 samples that were available at that time.

The earlier work assessing HLA diversity is published in “HLA diversity in the 1000 Genome Dataset”, with data available from the 1000 Genomes FTP site in ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/technical/working/20140725_hla_genotypes/.

Related questions:

What are your filename conventions?

Answer:

Our filename conventions depend on the data format being named. This is described in more detail below.

FASTQ

Our sequence files are distributed in gzipped fastq format.

Our files are named with the SRA run accession, in the form E?SRR000000.filt.fastq.gz. All the reads in the file also carry this name. Files with _1 and _2 in their names come from paired end sequencing runs. If there is also a file with no number in its name, it contains the fragments whose other end failed QC. The .filt in the name indicates that the data in the file has been filtered after retrieval from the archive. This filtering process is described in a README.
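As an illustration of the naming convention above, the following sketch classifies a fastq file by its name. The function name fastq_role and the labels it prints are hypothetical, and the accession in the usage note is only a placeholder.

```shell
# Classify a fastq file by the suffix described above.
# _1 / _2 are the two ends of a paired end run; a file with no number
# holds fragments whose other end failed QC.
fastq_role() {
    name=$(basename "$1")
    case "$name" in
        *_1.filt.fastq.gz) echo "mate1" ;;
        *_2.filt.fastq.gz) echo "mate2" ;;
        *.filt.fastq.gz)   echo "unpaired" ;;
        *)                 echo "unrecognised" ;;
    esac
}
```

For example, `fastq_role SRR000000_2.filt.fastq.gz` prints `mate2`, and `fastq_role SRR000000.filt.fastq.gz` prints `unpaired`.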

VCF

Our variant files are distributed in vcf format, a format initially designed for the 1000 Genomes Project which has seen wider community adoption.

The majority of our vcf files are named in the form:

ALL.chrN|wgs|wex.2of4intersection.20100804.snps|indels|sv.genotypes.analysis_group.vcf.gz

The name starts with the population that the variants were discovered in; if ALL is specified, all the individuals available at that date were used. Next comes the region covered by the call set, which can be a chromosome, wgs (the file contains at least all the autosomes) or wex (the whole exome), followed by a description of how the call set was produced or who produced it. The date matches the sequence and alignment freezes used to generate the variant call set. The next field describes what type of variant the file contains, followed by the analysis group used to generate the variant calls, which should be low coverage, exome or integrated. Finally, the name contains either sites or genotypes: a sites file contains just the first eight columns of the vcf format, whereas a genotypes file contains individual genotype data as well.

Release directories should also contain panel files which also describe what individuals the variants have genotypes for and what populations those individuals are from.

Related questions:

What do the population codes mean?

Answer:

Each three letter population code represents a different population. For example, CEU means Northern Europeans from Utah and TSI means Tuscans from Italy. There is a summary of all these codes both in a readme on the ftp site and in the Data Portal.

Related questions:

What is the coverage depth?

Answer:

The Phase 1 integrated variant set does not report the depth of coverage for each individual at each site. We instead report genotype likelihoods and dosage. If you would like to see depth of coverage numbers you will need to calculate them directly.

The bedtools suite provides a method to do this.

genomeCoverageBed can produce a bed file that specifies the coverage for every base in the genome, and intersectBed reports the intersection between two vcf/bed/bam files.

These commands also require samtools, tabix and vcftools to be installed.

An example set of commands would be:

samtools view -b ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/data/HG01375/alignment/HG01375.mapped.ILLUMINA.bwa.CLM.low_coverage.20120522.bam 2:1,000,000-2,000,000 | genomeCoverageBed -ibam stdin -bg > coverage.bg

This command gives you a bedgraph file of the coverage of the HG01375 bam between 2:1,000,000-2,000,000.

tabix -h http://ftp.1000genomes.ebi.ac.uk/vol1/ftp/phase1/analysis_results/integrated_call_sets/ALL.chr2.integrated_phase1_v3.20101123.snps_indels_svs.genotypes.vcf.gz 2:1,000,000-2,000,000 | vcf-subset -c HG01375 | bgzip -c > HG01375.vcf.gz

This command gives you the vcf file for 2:1,000,000-2,000,000 with just the genotypes for HG01375.

To get the coverage for all those sites you would use:

intersectBed -a HG01375.vcf.gz -b coverage.bg -wb > depth_numbers.vcf

For more information about bed file formats, please see the Ensembl File Formats Help.

For more information you may wish to look at our documentation about data slicing.

Related questions:

What sequencing platforms and methods were used by different projects within IGSR?

Answer:

Data in IGSR spans a wide range of technologies.

The technologies used in recent work are listed in the data portal and are visible in ‘Technology view’, although this does not include older technologies used in, for example, the pilot phase of the 1000 Genomes Project. The portal also enables filtering of data sets by technology.

The most common form of data is Illumina genomic sequence data. However, the number of samples with long read data from PacBio and Oxford Nanopore is growing.

Further detail on the technologies present in our collection can be found in the accompanying publications for the given collection.

Related questions:

What tools can I use to download IGSR data?

Answer:

The 1000 Genomes data is available via ftp, http, Aspera and Globus. Any standard tool like wget or ftp should be able to download from our ftp or http mounted sites. There are no official torrents of the 1000 Genomes Project data sets.

How to download files using Aspera

Download Aspera

Aspera provides a fast method of downloading data. To use the Aspera service you need to download the Aspera connect software. This provides a bulk download client called ascp.

Command line

For the command line tool ascp, for versions 3.3.3 and newer, you need to use a command line like:

ascp -i bin/aspera/etc/asperaweb_id_dsa.openssh -Tr -Q -l 100M -P33001 -L- fasp-g1k@fasp.1000genomes.ebi.ac.uk:vol1/ftp/release/20100804/ALL.2of4intersection.20100804.genotypes.vcf.gz ./

For versions 3.3.2 and older, you need to use a command line like:

ascp -i bin/aspera/etc/asperaweb_id_dsa.putty -Tr -Q -l 100M -P33001 -L- fasp-g1k@fasp.1000genomes.ebi.ac.uk:vol1/ftp/release/20100804/ALL.2of4intersection.20100804.genotypes.vcf.gz ./

Note, the only change between these commands is that for newer versions of ascp asperaweb_id_dsa.openssh replaces asperaweb_id_dsa.putty. You can check the version of ascp you have using:

ascp --version

The argument to -i may also be different depending on the location of the default key file. The command should not ask you for a password. All the IGSR data is accessible without a password but you do need to give ascp the ssh key to complete the command.
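The version rule above can be sketched as a helper that picks the key file name for a given ascp version number. This is a sketch only: the function name ascp_key_for_version is hypothetical, and it assumes a sort that supports the -V (version sort) option, as GNU coreutils does.

```shell
# Pick the key file name for an ascp version number such as 3.3.2 or 3.5.4.
# Versions 3.3.3 and newer use the .openssh key; older versions use .putty.
# Assumption: sort supports -V (version sort), as in GNU coreutils.
ascp_key_for_version() {
    version="$1"
    # If 3.3.3 sorts first (or is equal), the given version is 3.3.3 or newer.
    oldest=$(printf '%s\n%s\n' "3.3.3" "$version" | sort -V | head -n 1)
    if [ "$oldest" = "3.3.3" ]; then
        echo "asperaweb_id_dsa.openssh"
    else
        echo "asperaweb_id_dsa.putty"
    fi
}
```

The version number itself is passed in by hand here, taken from the output of `ascp --version`. The directory holding the key file still depends on your installation.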

Files on the ENA FTP

Some of the data we provide URLs for is hosted on the ENA FTP site. ENA provide information on using Aspera with their FTP site.

As an example of downloading a file from ENA, you could use a command line like:

ascp -i bin/aspera/etc/asperaweb_id_dsa.openssh -Tr -Q -l 100M -P33001 -L- era-fasp@fasp.sra.ebi.ac.uk:/vol1/fastq/ERR008/ERR008901/ERR008901_1.fastq.gz ./

Key files

If you are unsure of the location of asperaweb_id_dsa.openssh or asperaweb_id_dsa.putty, Aspera provide some documentation on where these will be found on different systems.

Ports

For the above commands to work with your network’s firewall you need to open ports 22/tcp (outgoing) and 33001/udp (both incoming and outgoing) to the following EBI IPs:

- 193.62.192.6

- 193.62.193.6

- 193.62.193.135

If the firewall has UDP flood protection, it must be turned off for port 33001.

Browser

Our Aspera browser interface no longer works. If you wish to download files using a web interface, we recommend using the Globus interface we present. If you previously relied on the Aspera web interface and wish to discuss your options, please email us at info@1000genomes.org.

How to download 1000 Genomes data with Globus Online?

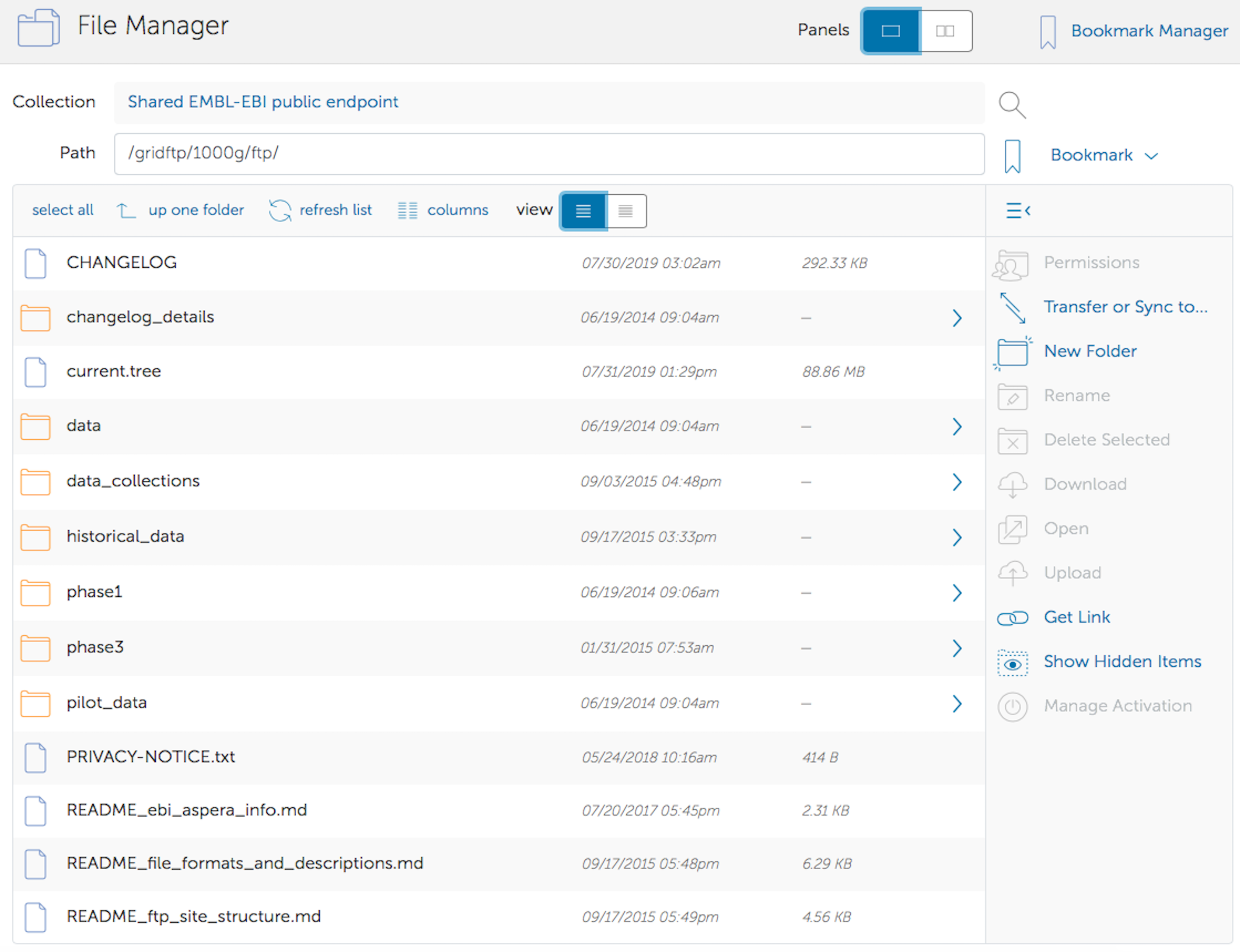

The 1000 Genomes FTP site is available as an end point in the Globus Online system. In order to access the data you need to sign up for an account with Globus via their signup page. You must also install the Globus Connect Personal software and set up a personal endpoint to download the data to.

The 1000 Genomes data is hosted at the EMBL-EBI end point called “Shared EMBL-EBI public endpoint”. Data from our FTP site can then be found under the 1000g directory within the EMBL-EBI public end point.

When you have set up your personal end point you should be able to start a transfer using their web interface.

The Globus website has support for setting up accounts and installing the Globus Connect Personal software.

Can I get 1000 Genomes data on the Amazon Cloud?

At the end of the 1000 Genomes Project, a large volume of the 1000 Genomes data (the majority of the FTP site) was made available on the Amazon AWS cloud as a public data set.

The IGSR was established at the end of the 1000 Genomes Project, and the FTP site has since been developed further with additional data sets. The Amazon AWS cloud reflects the data as it was at the end of the 1000 Genomes Project and does not include any updates or new data.

You can find more information about how to use the data in the Amazon AWS cloud on our AWS help page.

Related questions:

What types of genotyping data do you have?

Answer:

The 1000 Genomes Project has multiple sets of high density genotype information on both Illumina and Affymetrix platforms.

Affy 6.0 genotype

Coriell has carried out Affy 6.0 genotyping on many of the samples which are part of the NHGRI cell line catalog.

These files are available on our FTP site in release/20130502/supporting/hd_genotype_chip/.

Axiom genotype

The Affymetrix Axiom Exome chip was used to genotype some samples for the phase 1 analysis. Genotypes from the Axiom Exome chip for 1248 1000 Genomes samples are available in phase1/analysis_results/supporting/axiom_genotypes.

Omni genotype

Both the Sanger Institute and the Broad Institute have carried out genotyping of 1000 Genomes and HapMap samples on the Omni platform. The most recent set of Omni genotypes can be found in the phase 3 release directory release/20130502/supporting/hd_genotype_chip/. These contain GRCh37 based vcf files for the chip, and normalised and raw intensity files.

The ShapeIt2 scaffolds for these data, which were used in the phase 3 haplotype refinement project, can also be found in the phase 3 supporting directory release/20130502/supporting/shapeit2_scaffolds/.

Related questions:

What was the source of the DNA for sequencing?

Answer:

For the 1000 Genomes Project, the early samples were taken from the HapMap project and these all sourced their DNA from cell line cultures but some libraries were produced from blood.

The sample spreadsheet for the 1000 Genomes Project, 20130606_sample_info.txt, has annotation about the EBV coverage and the annotated sample source of the sequencing data in columns 59 and 60.

This spreadsheet gives the aligned coverage of EBV and notes whether Coriell stated that the sample was sourced from blood. Please note that some samples were sequenced using both blood and LCL transformed cells, but this data was not analysed independently, so the EBV coverage will be high. Also, some samples with very low EBV coverage (i.e. ~1x) may be from blood, with the coverage just indicating an endogenous EBV infection in the individual sampled.

For studies other than the 1000 Genomes Project, commonly the DNA is derived from cell lines as the original blood samples are limited. Further details, however, can be found by consulting the publications.

Related questions:

Where can I find a list of the sequencing and analysis done for each individual?

Answer:

Our data portal has a page for each sample. At the bottom of the page, the various data collections that the sample is present in are listed in tabs. Each tab then lists the available files for that sample, including sequence data, genotype arrays, alignments and VCFs.

An example is the page for NA12878. Sample IDs can be entered in the search box to locate a given sample.

To understand the data available for larger groups of samples, the samples and population tabs of the portal can be used to explore available data.

Related questions:

Where can I get consequence annotations for the IGSR variants?

Answer:

The final 1000 Genomes phase 3 analysis calculated consequences based on GENCODE annotation and this can be found in the directory: release/20130502/supporting/functional_annotation/

Ensembl also provides consequence information for the variants. The variants are loaded into the Ensembl database, and have consequence types assigned and displayed on the Variation view. Ensembl also offers consequence predictions using their Variant Effect Predictor (VEP).

Related questions:

Which reference assembly do you use?

Answer:

The reference assembly the 1000 Genomes Project has mapped sequence data to has changed over the course of the project.

For the pilot phase we mapped data to NCBI36. A copy of our reference fasta file can be found on the ftp site.

For the phase 1 and phase 3 analysis we mapped to GRCh37. Our fasta file, human_g1k_v37.fasta.gz, can be found on our ftp site. It contains the autosomes, X, Y and MT but no haplotype sequence or EBV.

Our most recent alignment release was mapped to GRCh38; this reference also contained decoy sequence, alternative haplotypes and EBV. The data was mapped using an alt aware version of BWA-mem. The fasta files can be found on our ftp site.

Related questions:

Why are the coordinates of some variants different to what is displayed in other databases?

Answer:

Data from the 1000 Genomes Project has now been analysed multiple times and on different reference assemblies. In addition, further data sets have been generated and analysed. These studies, therefore, use different data, different reference assemblies and different methodologies, which can lead to different variant calls being made.

The studies that IGSR makes data available for are listed with their accompanying publications in our data portal. The publications can provide further details.

Related questions:

Why are there chr 11 and chr 20 alignment files, and not for other chromosomes?

Answer:

The chr 11 and chr 20 alignment files were put in place to give the 1000 Genomes analysis group a small section of the genome to run test analyses on before committing to a particular strategy to run across the whole genome. Everything in the chr 11 and chr 20 files is also represented in the mapped bam file. To get a complete view of what data we aligned you only need to download the mapped and unmapped bams; the chr 11 and chr 20 bams are there as a convenience to the analysis group.

Related questions:

Why can't I find one of your variants in another database?

Answer:

As is described in “Why are the coordinates of some variants different to what is displayed in other databases?”, different analyses may produce different results.

The publications accompanying the data collections list the accessions for the variant calls in the variation archives.

Related questions:

Why do some of your vcf genotype files have genotypes of ./. in them?

Answer:

Our August 2010 call set represents a merge of various independent call sets. Not all the call sets in the merge had genotypes associated with them, and the merge was carried out using predefined rules. As a result, some individuals or whole variant sites have no genotype, which is described as ./. in vcf 4.0. In our November 2010 call set and all subsequent call sets, all sites have genotypes for all individuals for chr1-22 and X.
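To see how many genotypes are missing in a given file, a sketch like the following counts the ./. entries in uncompressed VCF text read from standard input. The function name count_missing_genotypes is hypothetical, and it assumes the genotype is the first colon-separated value in each sample column (column 10 onwards).

```shell
# Count sample genotypes recorded as ./. in VCF text read from standard input.
# Assumption: sample columns start at column 10 and the genotype (GT) is the
# first colon-separated value in each sample column.
count_missing_genotypes() {
    awk -F'\t' '
        /^#/ { next }                      # skip header lines
        {
            for (i = 10; i <= NF; i++) {
                split($i, parts, ":")
                if (parts[1] == "./.") missing++
            }
        }
        END { print missing + 0 }          # print 0 when nothing was missing
    '
}
```

For a local bgzipped file this could be used as `gunzip -c file.vcf.gz | count_missing_genotypes`, where file.vcf.gz stands for whichever genotype file you have downloaded.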

Related questions:

Why does a tabix fetch fail?

Answer:

There are two main reasons a tabix fetch might fail.

First, all our VCF files use plain integers and X/Y for their chromosome names in the Ensembl style, rather than chr1 in the UCSC style. If you request a subsection of a VCF file using a chromosome name in the style chrN, as shown below, it will not work.

tabix -h ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20100804/ALL.2of4intersection.20100804.genotypes.vcf.gz chr2:39967768-39967768

Second, tabix does not report a failure when streaming remote files but instead just stops streaming. This can lead to incomplete output, with final rows that have unexpected numbers of columns, when trying to stream large sections of the file. The only way to avoid this is to download the file and work with it locally.
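Both problems can be guarded against with small helpers, sketched here under stated assumptions. The function names ensembl_region and check_vcf_columns are hypothetical. The second helper assumes the input includes the #CHROM header line (as produced by tabix -h) and only detects truncation that leaves a row with the wrong number of columns.

```shell
# Strip a leading UCSC-style "chr" from a region so it matches the
# Ensembl-style chromosome names used in our VCF files.
ensembl_region() {
    printf '%s\n' "${1#chr}"
}

# Count data rows whose column count differs from the #CHROM header line.
# A non-zero count suggests the stream stopped part-way through a row.
check_vcf_columns() {
    awk -F'\t' '
        /^##/     { next }                    # meta-information lines
        /^#CHROM/ { expected = NF; next }     # column header sets the width
        expected && NF != expected { bad++ }
        END { print bad + 0 }
    '
}
```

For example, `ensembl_region chr2:39967768-39967768` prints `2:39967768-39967768`, which is the form tabix expects for these files. Saved tabix output can be piped through check_vcf_columns before it is used further.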